Introduction

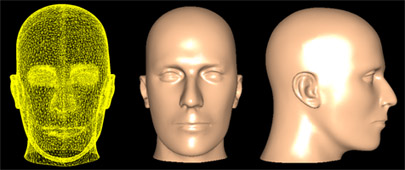

Method Overview

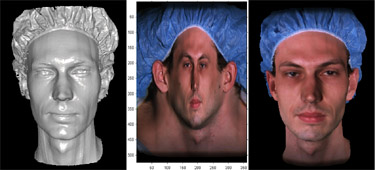

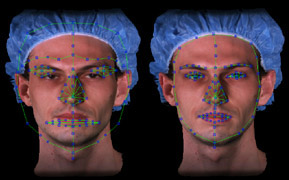

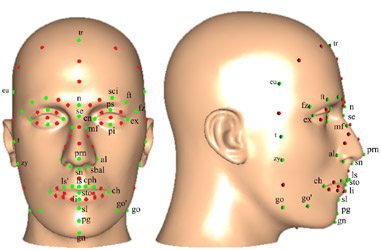

Face Data and Preprocessing

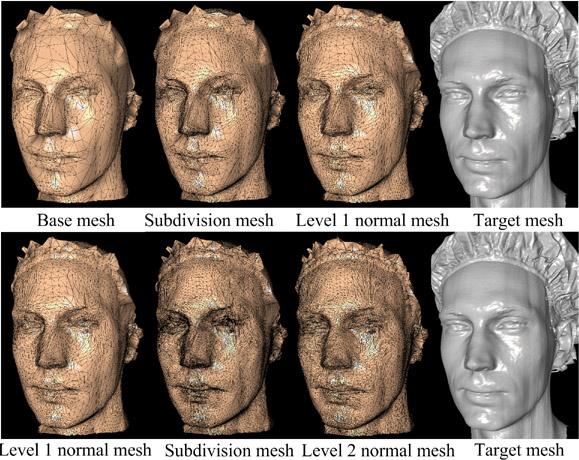

Model Fitting

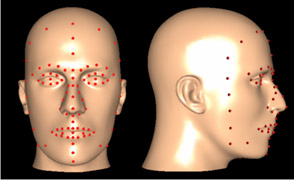

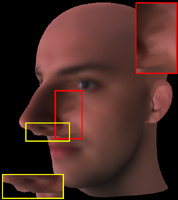

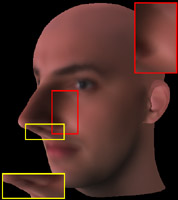

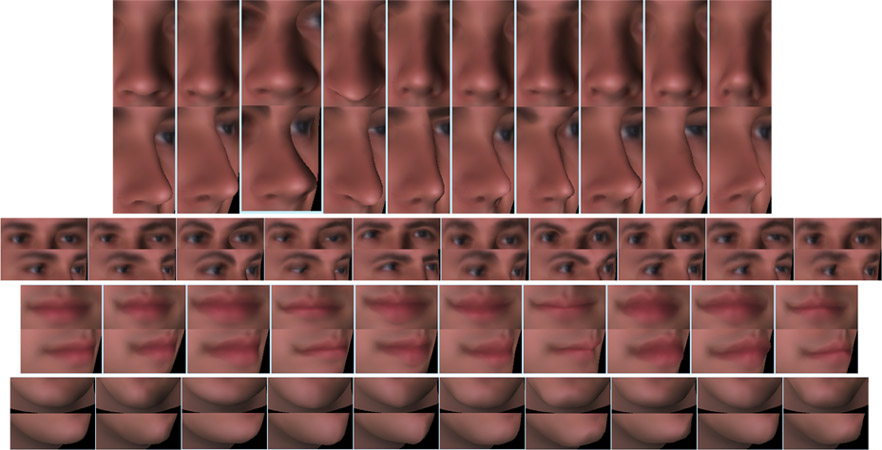

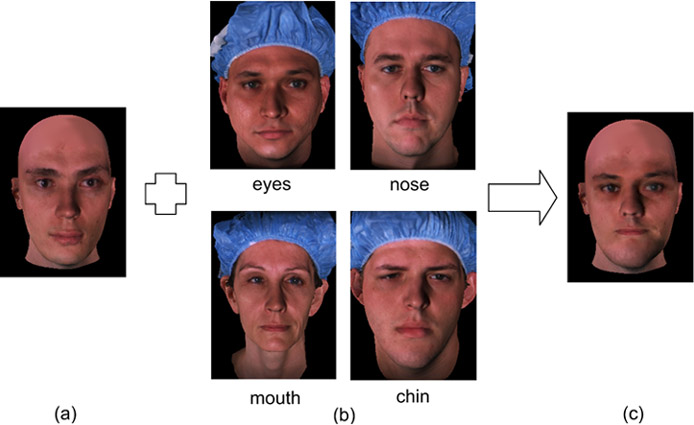

Region-Based Face Shape Synthesis

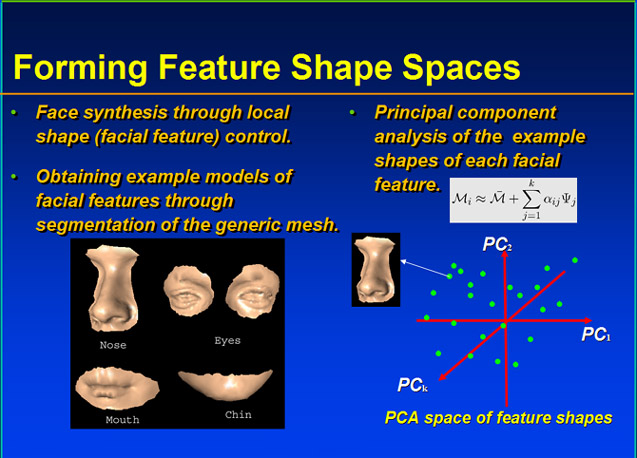

Forming Local Shape Spaces

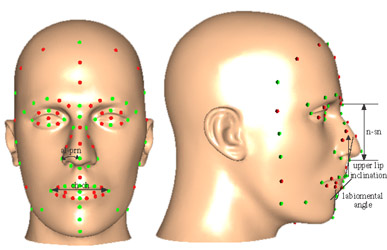

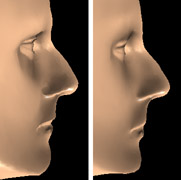

Anthropometric Parameters

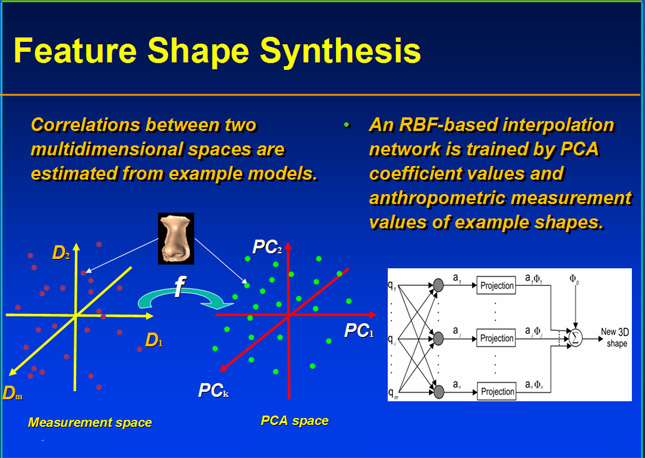

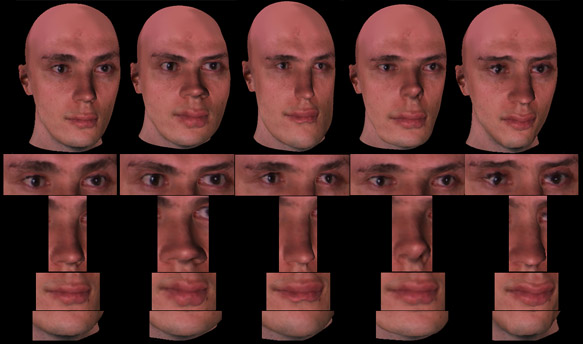

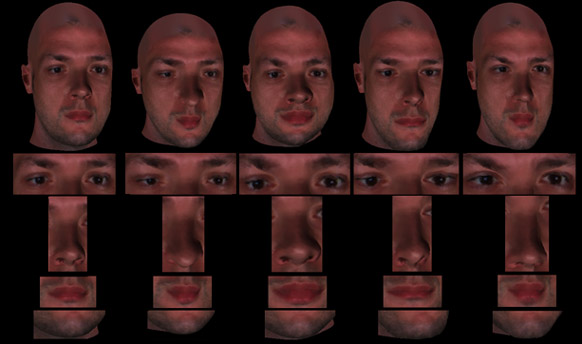

Feature Shape Synthesis

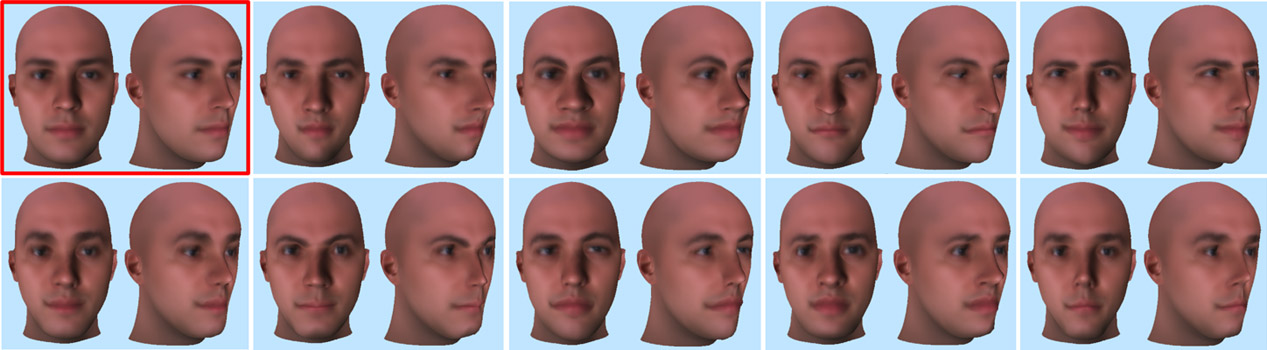

Results

Other Applications

Copyright 2005-2013, Yu Zhang.

This material may not be published, modified or otherwise redistributed in whole or part without prior approval.