Anatomy-based 3D Face Modeling

Introduction

3D face modeling and animation has long fascinated computer graphics researchers, not only because faces are ubiquitous in the real world, but also because of the inherent difficulty of creating surface deformations that express particular behaviors. Applications of facial simulation have multiplied with continuing advances in computing power, display technology, graphics, image capture, and interfacing tools. In some areas a good model of the human face is essential for success. For example, a good 3D facial model can be used in character animation for the entertainment industry and advertising, low-bandwidth teleconferencing, advanced human-computer interfaces, computer-simulated facial surgery, realistic human representation in virtual reality, visual speech synthesis for the deaf and hard of hearing, face and facial expression recognition, and the analysis of facial expression by psychologists studying non-verbal communication. The common challenge has been to develop facial models that not only look real but can also synthesize the various nuances of facial motion quickly and accurately.

However, the face has a complex anatomical structure, it exhibits a multitude of subtle expressional variations, and we as humans have an uncanny sensitivity to facial appearance. Because of these factors, synthesizing realistic facial animation remains a tedious and difficult task. To tackle this challenge, two aspects have to be addressed: structure modeling and deformation modeling. Structure modeling defines the shape and features of a face and models interior facial details such as tissue, muscles and bone. Its ultimate objective is to model human facial anatomy, including its movements, exactly enough to satisfy both the structural and the functional aspects of simulation. Deformation modeling, on the other hand, deals with deforming the face to generate the dynamic effects needed for intelligible reproduction of facial expressions.

The face is highly deformable, particularly around the forehead, eyes and mouth, and these deformations convey a great deal of meaningful information. We believe that a good foundation for face modeling and animation is the anatomy of the face, especially the arrangement and actions of the primary facial muscles. Developing a detailed 3D model of the human face and its musculature based on facial anatomy, and then simulating the subtle nuances of facial expression, is more promising than using purely geometric face models, which ignore the fact that the human face is an elaborate biomechanical system. Because the model simulates the mechanical properties of the skin and underlying muscles, it extends the range of possible facial deformations and can simulate a variety of expressions. Because the model has a structure comparable to the real human face, it is also possible to control facial movement with a limited number of intuitive parameters and relatively little effort. Moreover, the modeling of the skin and muscles can be independent of facial topology, so it is a general approach for synthesizing various expressions and is applicable to different face models. Our face model therefore takes what we believe to be a fundamentally superior, anatomy-based approach to synthesizing the many subtleties of facial tissue deformation under the dynamic action of the inner facial structures. With such a model, the mechanism for synthesizing facial expressions is very close to the actual one in the human face, which makes it possible to synthesize a wide range of realistic expressions dynamically and efficiently.

Objective

The objective of this project is to develop a realistic 3D facial model sophisticated enough to accurately reproduce the various nuances of facial structure and motion. Such a model should be capable of controlled non-rigid deformation of various facial regions, in a fashion similar to how humans generate facial expressions through the actuation of muscles attached to facial tissue. Realistic facial expressions should be synthesized dynamically, and the animation process should run fast enough for interactive applications.

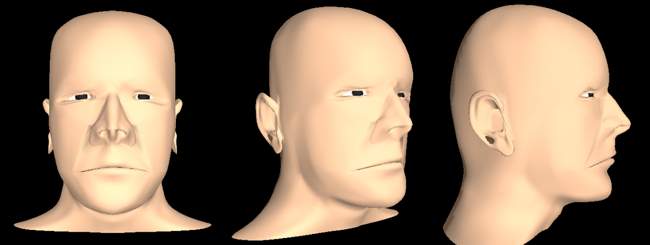

An anatomy-based face model with a multi-layer structure

Because the final facial animation is directly related to the model structure, we believe that we can obtain more realistic results by applying the same principles found in nature. For this reason, we propose a new 3D face model that conforms to human anatomy for realistic and fast facial expression synthesis. We analyze the real human face to construct a face model with a hierarchical biomechanical structure that incorporates a physically-based approximation of facial skin tissue, a set of anatomically-motivated facial muscle actuators, and an underlying rigid skull. Such a hierarchical model structure enables us to generate facial expressions using a mechanism that is very close to the actual one in the human face. Our efforts have been devoted to modeling each of these anatomical components.

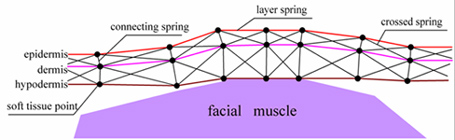

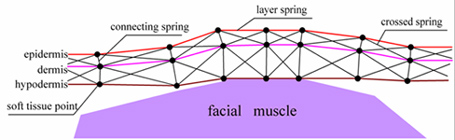

Multi-layer Deformable Skin Model

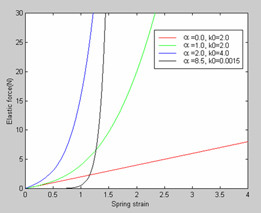

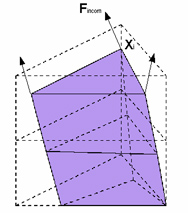

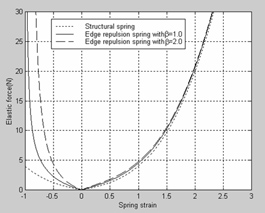

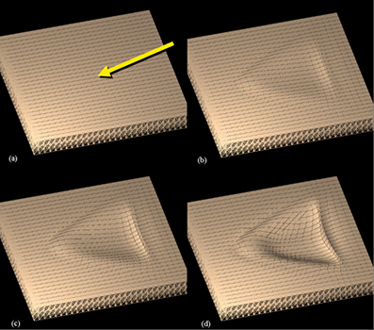

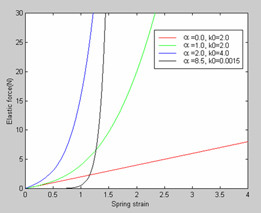

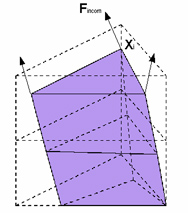

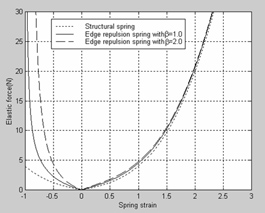

The biomechanical soft tissue model reflects the fact that skin is a nonhomogeneous deformable object consisting of different layers. It employs a multi-layer structure built from different sets of springs to simulate the dynamic behavior of the different types of soft tissue. In the skin model, each tissue layer is represented by its own visco-elastic mesh, and the entire multi-layer soft tissue structure is a collection of meshes augmented by spatial relations specifying which surfaces are in contact. The skin model has been developed to simulate many aspects of the mechanical behavior of facial tissue. In particular, it takes into account the nonlinear stress-strain relationship of the skin and the fact that soft tissue is almost incompressible because of its liquid components. Instead of approximating the nonlinear behavior of soft tissue with linear springs, we developed a new kind of nonlinear spring, called the structural spring, to directly simulate the nonlinear dynamic deformation of facial skin under muscle contraction. The incompressibility of the skin is handled by a constraint force that penalizes volume variation. The skin model is also made robust by a second new type of nonlinear spring, called the face repulsion spring. It extends the nonlinear nature of the structural spring and applies an additional pressure force to the skin nodes during skin deformation. This effectively preserves the prismatic element structure of the skin model during dynamic simulation without a heavy computational cost.
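The two force ingredients described above, a spring that stiffens with strain and a penalty on volume change of a prismatic skin element, can be illustrated with a minimal sketch. The exponential stiffening law, the parameter names `k0`, `nonlinearity` and `k_volume`, and the three-tetrahedron volume computation are all assumptions chosen for illustration; the project page does not give the actual formulas of the structural spring or the volume constraint.

```python
import math

def structural_spring_force(length, rest_length, k0=1.0, nonlinearity=2.0):
    """Signed force along a spring; positive values pull the end points together.

    Hypothetical law (not from the source): the response is nearly linear for
    small strain and stiffens exponentially as the strain magnitude grows.
    """
    strain = (length - rest_length) / rest_length
    return k0 * strain * math.exp(nonlinearity * abs(strain))

def _tet_volume(p, q, r, s):
    """Unsigned volume of the tetrahedron (p, q, r, s) via a scalar triple product."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    w = [s[i] - p[i] for i in range(3)]
    det = (u[0] * (v[1] * w[2] - v[2] * w[1])
           - u[1] * (v[0] * w[2] - v[2] * w[0])
           + u[2] * (v[0] * w[1] - v[1] * w[0]))
    return abs(det) / 6.0

def prism_volume(a, b, c, d, e, f):
    """Volume of a triangular prism with bottom face (a, b, c) and top face
    (d, e, f), where d lies above a, e above b and f above c.
    The prism is split into three tetrahedra."""
    return _tet_volume(a, b, c, d) + _tet_volume(b, c, d, e) + _tet_volume(c, d, e, f)

def volume_penalty(volume, rest_volume, k_volume=1.0):
    """Scalar pressure-like term: positive when the element is compressed
    (push nodes outward), negative when it is inflated (pull nodes inward)."""
    return k_volume * (rest_volume - volume) / rest_volume

# Usage: a unit right prism squashed to half its height.
bottom = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
rest_top = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)]
squashed_top = [(0.0, 0.0, 0.5), (1.0, 0.0, 0.5), (0.0, 1.0, 0.5)]
v0 = prism_volume(*bottom, *rest_top)
v1 = prism_volume(*bottom, *squashed_top)
pressure = volume_penalty(v1, v0)
```

In a full simulation the scalar penalty would be distributed to the six nodes of the element along suitable directions (for example, face normals); that distribution step is omitted here.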

Consistent multi-layer facial skin model.

Left: stress-strain relationship of the structural spring with different parameter values. Center: penalizing the volume variation. Right: magnitude of the force generated by the structural springs and edge repulsion springs.

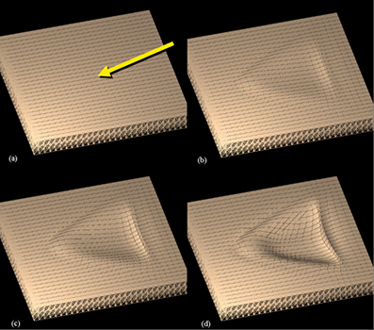

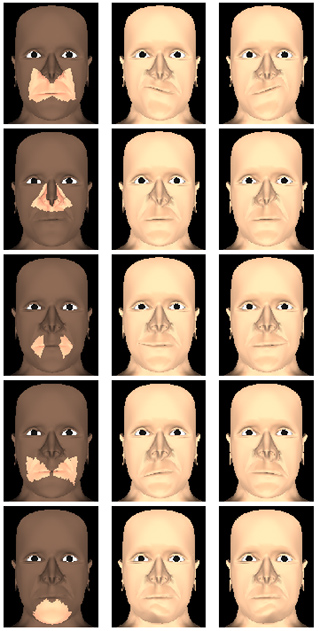

Dynamic deformation of the multi-layer soft tissue under the muscle force.

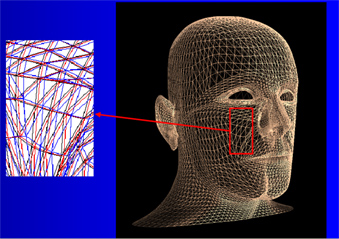

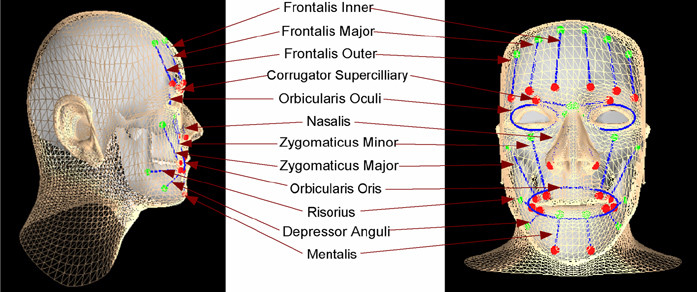

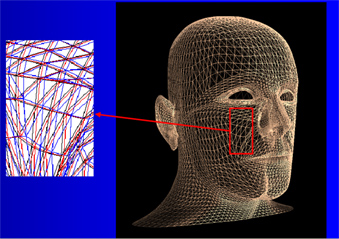

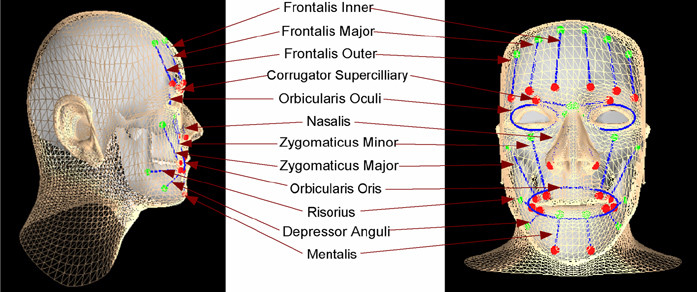

Modeling of Facial Muscles

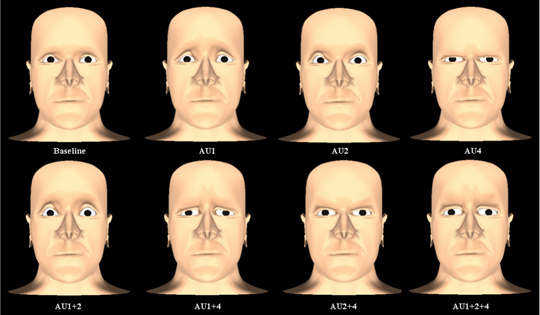

We developed a muscle process that includes different kinds of force-based facial muscle models to simulate facial muscle contraction. Instead of using complex volumetric models, our muscle models simulate the distribution of the muscle force exerted on the facial skin. The magnitude of the muscle force exerted on the skin is nonuniform: it depends on the position of the skin point relative to the central muscle fiber. As in the real human face, the force-based muscle models cause dynamic motion of the skin in response to muscle contraction, which leads to more flexible animation. To synthesize facial expressions, we make an important hypothesis: any facial expression can be viewed as a weighted combination of the contractions of a set of typical facial muscles. The desired facial expression is mapped to facial muscle activations using FACS (the Facial Action Coding System). By employing a simplified form of FACS, the muscles are grouped by position and act in harmony with the facial skin they are connected to.
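A minimal sketch of these two ideas follows: a force on a skin node that decays with distance from the central muscle fiber, and an expression formed as a weighted combination of muscle contractions. The cosine falloff, the influence `radius`, the pull direction toward the attachment point, and all function names are assumptions made for illustration; they are not the project's actual muscle models.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def _scale(a, s):
    return tuple(x * s for x in a)

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _norm(a):
    return math.sqrt(_dot(a, a))

def muscle_force(node, attachment, insertion, contraction,
                 radius=1.0, max_force=1.0):
    """Force on one skin node from a linear muscle (hypothetical model).

    attachment: fixed end of the central fiber (on the skull)
    insertion:  moving end of the central fiber (in the skin)
    contraction: activation level in [0, 1]
    """
    fiber = _sub(insertion, attachment)
    fiber_len = _norm(fiber)
    direction = _scale(fiber, 1.0 / fiber_len)
    # Closest point on the central fiber segment to the node.
    t = max(0.0, min(fiber_len, _dot(_sub(node, attachment), direction)))
    closest = _add(attachment, _scale(direction, t))
    dist = _norm(_sub(node, closest))
    if dist >= radius:
        return (0.0, 0.0, 0.0)  # outside the zone of influence
    # Assumed cosine falloff: 1 on the fiber, 0 at the influence radius.
    falloff = 0.5 * (1.0 + math.cos(math.pi * dist / radius))
    # Contraction pulls the skin toward the attachment point.
    return _scale(direction, -max_force * contraction * falloff)

def expression_force(node, muscles, contractions):
    """Total force on a node: sum of per-muscle forces, each weighted by its
    contraction level. `muscles` is a list of (attachment, insertion) pairs."""
    total = (0.0, 0.0, 0.0)
    for (attachment, insertion), c in zip(muscles, contractions):
        total = _add(total, muscle_force(node, attachment, insertion, c))
    return total

# Usage: two muscles acting on one node with different activation weights.
muscles = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
           ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
force = expression_force((0.5, 0.2, 0.0), muscles, [0.8, 0.3])
```

Mapping FACS action units to the `contractions` list would sit on top of this; the page does not specify that mapping, so it is left out.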

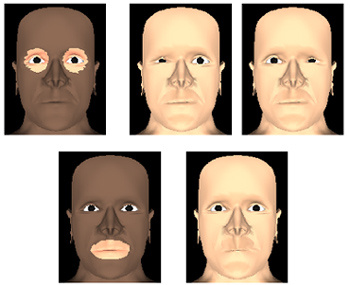

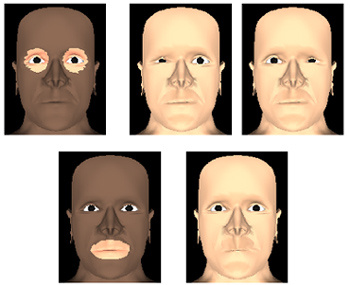

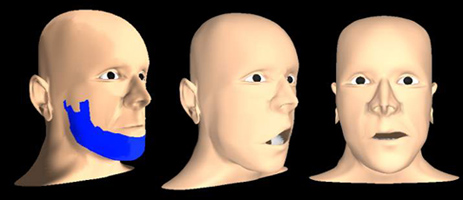

Muscle structure in the face model.

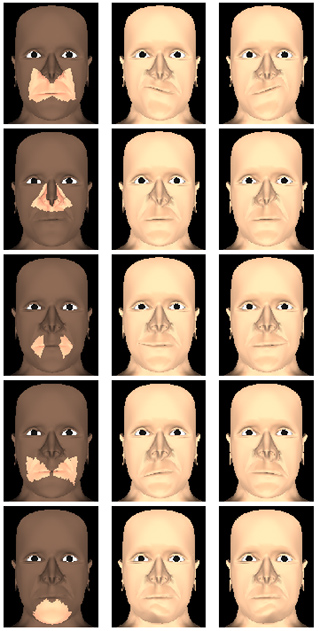

Contraction of different individual facial muscles causes local facial deformation.

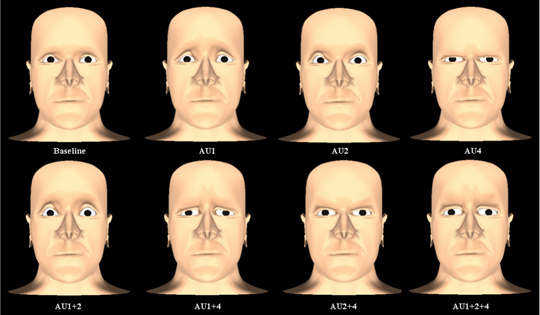

Different action units (AUs) for the eyebrow and forehead identified by FACS.

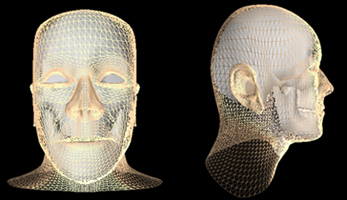

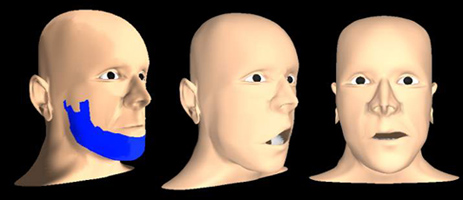

Skull and Jaw

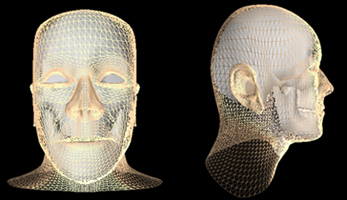

The 3D facial model incorporates a skull structure. We use a generic skull model and adapt it to the reconstructed facial skin mesh. By taking the shape of the skull into account, we can reproduce the motion of the mouth caused by the lower jaw and express facial skin deformation under the constraint of the skull's shape. Moreover, the skull model allows us to place the facial muscles in accurate positions, connecting the skull and the skin as in an actual face. To construct the facial muscles efficiently, we developed a muscle mapping approach that automatically locates the muscles at anatomically correct positions between the skin and skull layers. With this approach, new degrees of freedom for animation are easily introduced into the model by adding new muscles.

Skull template fitted to the facial skin mesh and articulation of the jaw.

Computational Model for Dynamic Simulation

To simulate the dynamics of the facial skin, the system of coupled second-order ordinary differential equations is numerically integrated through time. Because force evaluations are time-consuming, the integration technique should minimize them: it should avoid exceedingly small simulation time steps and require only a small number of force evaluations per step. Numerical integration methods generally fall into two classes: implicit and explicit. Explicit schemes are easy to implement, but their stable integration time step is inversely proportional to the square root of the stiffness. In practice, they require small time steps to advance the simulation stably; otherwise the system blows up rapidly, because forces applied over too large a time step can induce wild changes in position. Implicit techniques are generally more stable and permit larger step sizes, but the time spent constructing and solving linear systems of equations is too large to meet the requirement of a high simulation rate. We developed a semi-implicit integration method that finds a compromise between the stability of an implicit method and the simplicity and low per-step cost of an explicit iteration.
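The trade-off can be seen on a single mass attached to a spring. The sketch below compares forward (explicit) Euler with the standard semi-implicit (symplectic) Euler scheme, which updates the velocity first and then uses the new velocity to update the position. This textbook scheme is used here only as a stand-in: the page does not describe the project's own semi-implicit method, and the function names and parameter values are assumptions. The helper `critical_timestep` gives the undamped stability limit of the semi-implicit scheme, dt < 2·sqrt(m/k), which shows the inverse square-root dependence on stiffness mentioned above.

```python
import math

def critical_timestep(mass, stiffness):
    """Stability limit of semi-implicit Euler for an undamped spring:
    the step must satisfy dt < 2 * sqrt(m / k)."""
    return 2.0 * math.sqrt(mass / stiffness)

def simulate(stiffness, mass, dt, steps, semi_implicit, damping=0.0):
    """Integrate m*x'' = -k*x - c*x' from x=1, v=0.
    Returns the largest |x| seen, as a simple measure of stability."""
    x, v = 1.0, 0.0
    max_abs_x = abs(x)
    for _ in range(steps):
        a = (-stiffness * x - damping * v) / mass  # one force evaluation per step
        if semi_implicit:
            v += dt * a      # velocity from the current force
            x += dt * v      # position from the NEW velocity
        else:
            x_new = x + dt * v   # position from the OLD velocity
            v += dt * a
            x = x_new
        max_abs_x = max(max_abs_x, abs(x))
    return max_abs_x

# Usage: a stiff spring stepped at a quarter of the critical time step.
k, m = 100.0, 1.0
dt = 0.25 * critical_timestep(m, k)   # 0.05 for these values
explicit_peak = simulate(k, m, dt, 200, semi_implicit=False)
semi_implicit_peak = simulate(k, m, dt, 200, semi_implicit=True)
```

Both variants cost one force evaluation per step, yet the explicit run grows without bound while the semi-implicit run stays close to the initial amplitude; a fully implicit scheme would allow still larger steps at the price of a linear solve per step.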

Interactive Simulation Environment

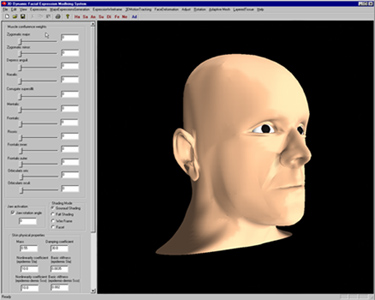

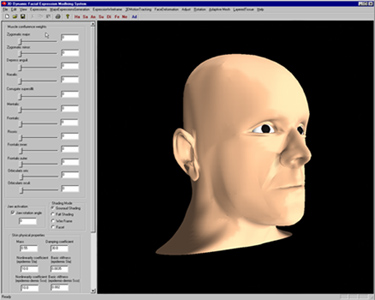

Because the facial actions associated with typical expressions are parameterized on the basis of anatomical analysis, we developed a Windows GUI to facilitate interactive user control. The user can specify the following parameters interactively: muscle contraction rates, jaw action parameters, skin property parameters (mass, basic spring stiffness, nonlinearity factor and damping coefficient), rigid face movement parameters, and shading modes. The skin property parameters take the same values for all facial expressions, so they need to be adjusted only once. The parameters that must be specified for each facial expression are the muscle contraction rates and the jaw action parameters. By performing a binary search over their values, guided by visual feedback, the parameters for a desired facial expression can be calibrated in a short time.

GUI of our 3D face modeling and animation system.

Facial Animation

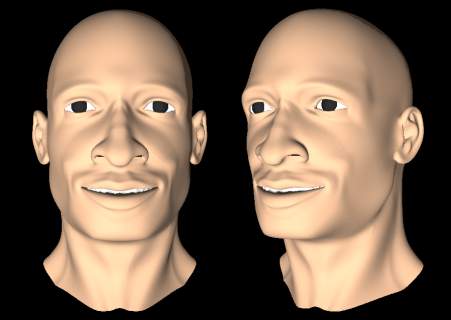

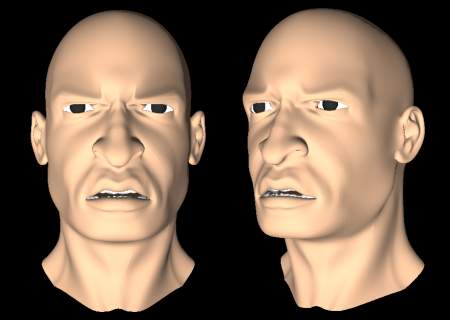

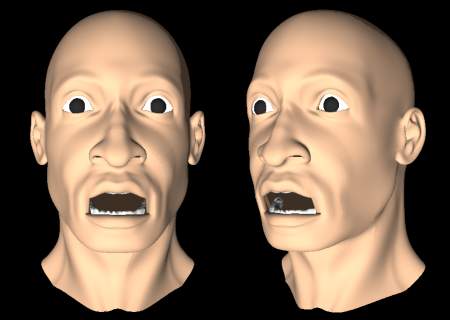

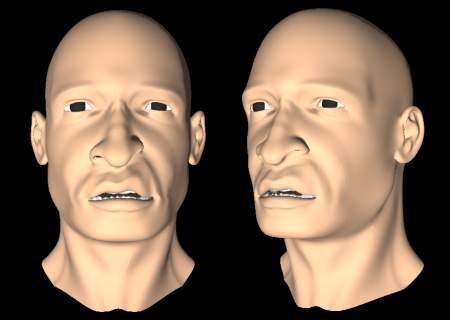

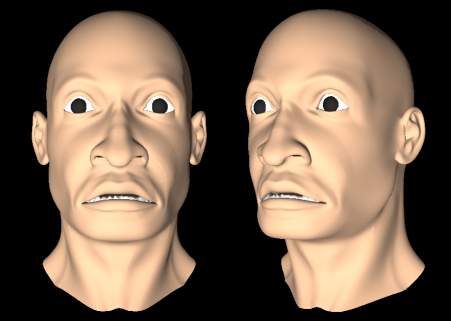

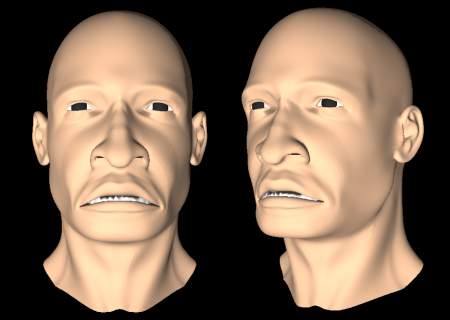

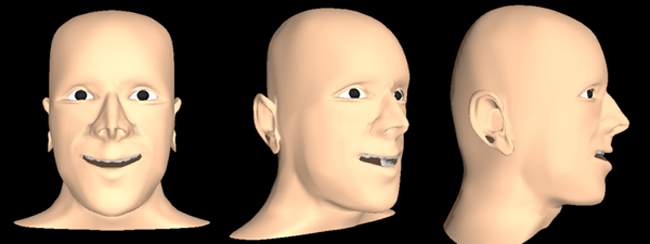

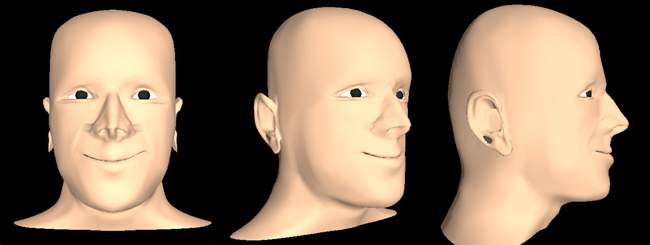

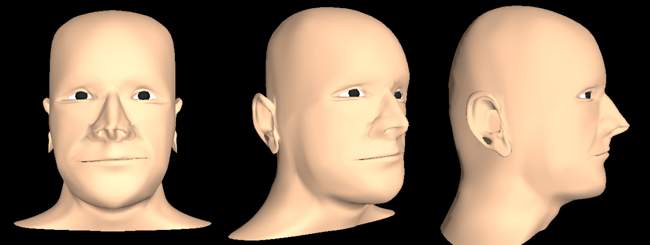

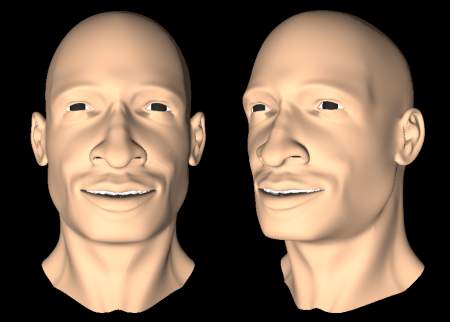

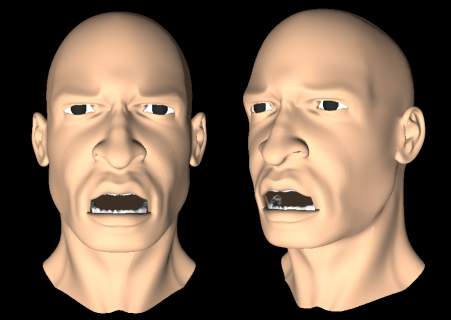

- Primary facial expressions synthesized on the anatomy-based model:

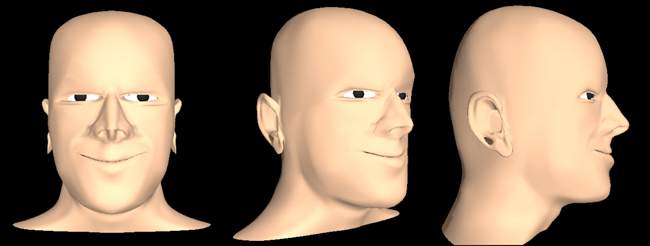

- Variations of the primary expressions synthesized on the models:

Papers:

- Yu Zhang, Edmond C. Prakash and Eric Sung. "HFSM: Hierarchical Facial shape modeling algorithm for realistic facial expression animation". International Journal of Shape Modeling, 9(1): 101-135, 2003.

- Yu Zhang, Edmond C. Prakash and Eric Sung. "Efficient modeling of an anatomy-based face and fast 3D facial expression synthesis". Computer Graphics Forum, 22(2): 159-169, June 2003.

- Yu Zhang, Edmond C. Prakash and Eric Sung. "Anatomy-based 3D facial modeling for expression animation". Machine GRAPHICS & VISION Journal, 11(1): 53-76, 2002.

- Yu Zhang, Edmond C. Prakash and Eric Sung. "Animation of facial expressions by physical modeling". Eurographics2001, Short Presentations, pp. 335-345, Manchester, UK, Sept. 2001.

- Yu Zhang, Edmond C. Prakash and Eric Sung. "Real-time physically-based facial expression animation using mass-spring system". Proc. Computer Graphics International 2001 (CGI2001), IEEE Computer Society Press, Hong Kong, China, pp. 347-350, July 2001.

- Yu Zhang, Eric Sung and Edmond C. Prakash. "A Physically-based model for real-time facial expression animation". Proc. 3rd International Conference on 3D Digital Imaging and Modeling (3DIM2001), IEEE Computer Society Press, pp. 399-406, Quebec City, Canada, May 2001.

- Yu Zhang, Eric Sung and Edmond C. Prakash. "3D modeling of dynamic facial expressions for facial image analysis and synthesis". Proc. 14th International Conference on Vision Interface (VI2001), pp. 1-8, Ottawa, Canada, June 2001.

Copyright 2005-2013, Yu Zhang.

This material may not be published, modified or otherwise redistributed in whole or part without prior approval.